If you are looking for a way to run AI models locally, you have come to the right place. This blog post aims to demystify Ollama, highlighting its key features and providing a step-by-step guide on how to use it effectively.

What is Ollama

In simple terms, Ollama is an application that lets you run large language models (LLMs) locally, on your own computer.

Ollama supports a range of open models that run on a local machine, including:

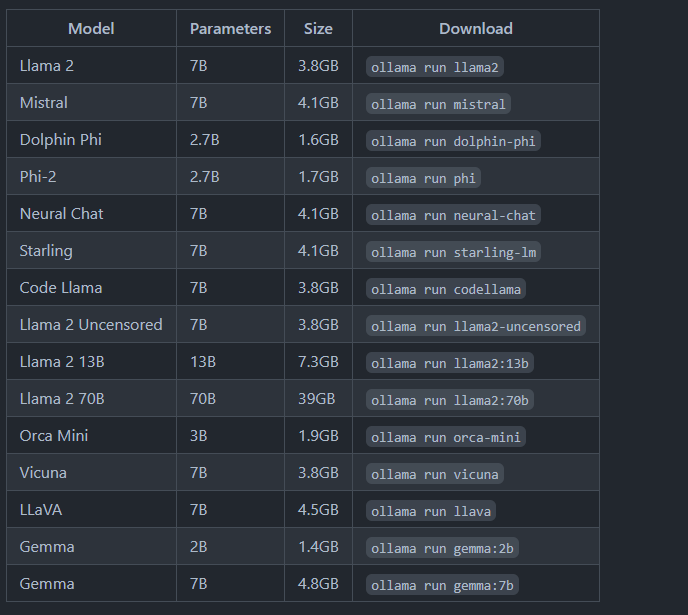

| Model | Parameters |
| --- | --- |
| Llama 2 | 7B |
| Mistral | 7B |
| Dolphin Phi | 2.7B |
| Phi-2 | 2.7B |
| Neural Chat | 7B |
| Starling | 7B |
| Code Llama | 7B |
| Llama 2 Uncensored | 7B |
| Llama 2 13B | 13B |
| Llama 2 70B | 70B |
| Orca Mini | 3B |
| Vicuna | 7B |
| LLaVA | 7B |
| Gemma | 2B |
| Gemma | 7B |

Quick Note: To operate the 7B models, ensure you have a minimum of 8 GB of RAM. For the 13B models, you’ll need at least 16 GB of RAM, and to run the 33B models, you should have 32 GB of RAM available.
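The RAM note above is really a small lookup table. The following Python helper is a hypothetical sketch, not part of Ollama, that checks a machine against that table. Two parts of it are assumptions and do not come from the note. First, a model smaller than a listed size is treated as needing that size's RAM, so a 2.7B model falls in the 8 GB tier. Second, sizes above 33B, such as Llama 2 70B, raise an error instead of getting a guessed value.

```python
# Hypothetical helper encoding the RAM note above (not part of Ollama).
# Keys are model sizes in billions of parameters, values are minimum RAM in GB.
MIN_RAM_GB = {7: 8, 13: 16, 33: 32}


def min_ram_gb(params_billions: float) -> int:
    """Return the RAM (GB) of the smallest listed size that covers this model.

    Assumption: a model smaller than a listed size needs that size's RAM,
    e.g. a 2.7B model is treated like a 7B one. Sizes above 33B are not
    covered by the note, so they raise ValueError instead of being guessed.
    """
    for size in sorted(MIN_RAM_GB):
        if params_billions <= size:
            return MIN_RAM_GB[size]
    raise ValueError(f"no RAM guideline for a {params_billions}B model")


def can_run(params_billions: float, available_ram_gb: float) -> bool:
    """True if the available RAM meets the guideline for this model size."""
    return available_ram_gb >= min_ram_gb(params_billions)
```

For example, `min_ram_gb(13)` returns 16 and `can_run(13, 8)` returns False. Real memory use also depends on the model's quantization and what else is running, so treat this as a rough first check.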

How to install and set up Ollama locally

You can install Ollama on macOS and Windows by downloading the installer from the links below:

macOS: https://ollama.com/download/Ollama-darwin.zip

Windows: https://ollama.com/download/OllamaSetup.exe
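If you script your setup, choosing the right download comes down to a lookup on the operating system name. The following minimal Python sketch assumes `installer_url` is a hypothetical helper. The dictionary keys are the names Python's `platform.system()` reports: "Darwin" for macOS and "Windows" for Windows. Any other platform raises an error because this post lists no link for it.

```python
import platform
from typing import Optional

# Download links from the list above, keyed by platform.system() names.
INSTALLERS = {
    "Darwin": "https://ollama.com/download/Ollama-darwin.zip",
    "Windows": "https://ollama.com/download/OllamaSetup.exe",
}


def installer_url(system: Optional[str] = None) -> str:
    """Return the installer link for `system` (defaults to the current OS)."""
    system = system or platform.system()
    try:
        return INSTALLERS[system]
    except KeyError:
        # No link is listed here for other platforms, so refuse to guess.
        raise ValueError(f"no installer link listed for {system!r}") from None
```

Calling `installer_url()` with no argument picks the link for the machine it runs on.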

How to pull new models with Ollama

To get started with a new model, download it with the pull command:

ollama pull llama2

You can also pull models through Ollama's REST API. For example, to pull Code Llama 34B:

curl -X POST http://127.0.0.1:11434/api/pull -d '{"model": "codellama:34b", "name": "codellama:34b"}'

This assumes the Ollama server is listening on its default port, 11434. Once a model has been pulled, you can run it from the command line:

ollama run [your installed model name]

For example:

ollama run llama2

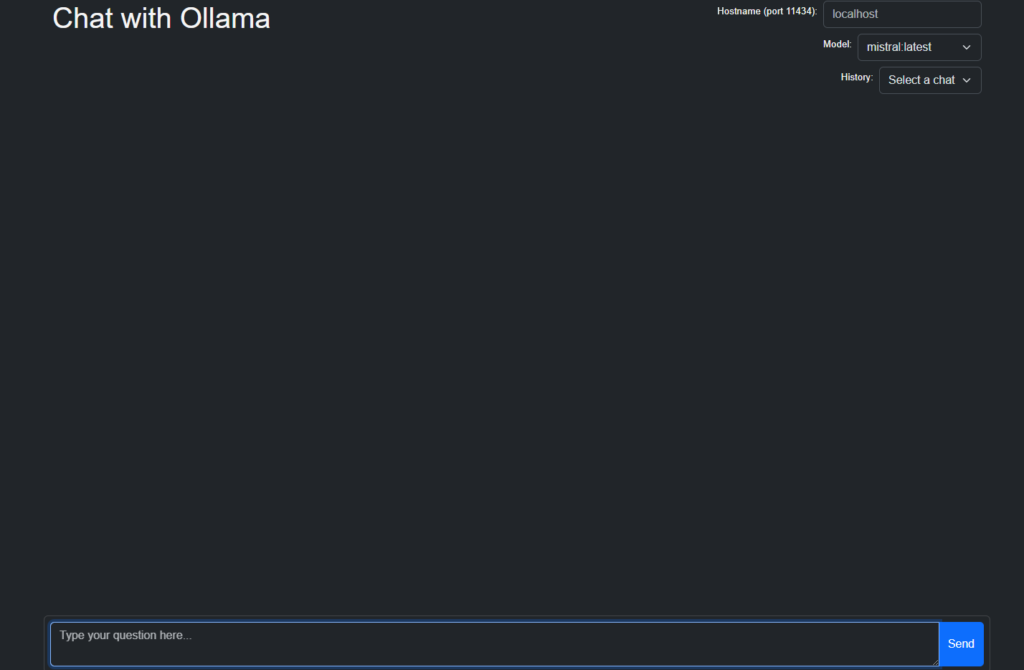
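`ollama run` opens an interactive chat. To get an answer from a script instead, you can call the server's `/api/generate` endpoint. The following is a minimal Python sketch that uses only the standard library. It assumes the default address `http://127.0.0.1:11434` and a model you have already pulled, such as `llama2`. `generate` and `join_stream` are illustrative names, not part of any Ollama library. By default the endpoint streams its reply as newline-delimited JSON, with one object per line. The sketch joins the `"response"` fragments until an object with `"done": true` arrives.

```python
import json
import urllib.request
from typing import Iterable

# Assumption: Ollama's default address; change it if your server runs elsewhere.
OLLAMA_URL = "http://127.0.0.1:11434"


def join_stream(lines: Iterable[bytes]) -> str:
    """Concatenate the "response" fragments of a streamed /api/generate reply."""
    parts = []
    for raw in lines:
        raw = raw.strip()
        if not raw:  # skip blank lines between JSON objects
            continue
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):  # the final object marks the end of the answer
            break
    return "".join(parts)


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send a prompt to a local model and return the full answer as one string."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        # Iterating the HTTP response yields one line at a time,
        # which matches the newline-delimited JSON framing.
        return join_stream(resp)
```

With the server running, `print(generate("llama2", "Why is the sky blue?"))` prints the model's answer once it has finished streaming.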

Alternatively, you can chat with your local models in the browser through the ollama-ui Chrome extension:

Extension URL: https://chromewebstore.google.com/detail/ollama-ui/cmgdpmlhgjhoadnonobjeekmfcehffco
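Model downloads can be scripted too. The following minimal Python sketch rebuilds the earlier curl call to `/api/pull` with only the standard library. It assumes the default address `http://127.0.0.1:11434`; adjust `OLLAMA_URL` if you changed it, for example through the `OLLAMA_HOST` setting. `build_pull_request` and `pull` are hypothetical helper names. Like the curl example, the body sends the model name under both `"model"` and `"name"`. It also sets `"stream": false` so the server answers with a single JSON object instead of a stream of progress updates.

```python
import json
import urllib.request

# Assumption: Ollama's default address.
OLLAMA_URL = "http://127.0.0.1:11434"


def build_pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /api/pull request for `model`."""
    body = json.dumps({"model": model, "name": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull(model: str) -> dict:
    """Send the pull request; this blocks until the download finishes."""
    with urllib.request.urlopen(build_pull_request(model)) as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes the payload easy to inspect before you call `pull("codellama:34b")`, which can take a while for a 34B model.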

How to remove a model from Ollama

Run the following command to remove a model from Ollama:

ollama rm <YOUR MODEL NAME>

For example:

ollama rm llama2

Conclusion

In conclusion, Ollama offers an accessible way to run AI models locally, with a range of models such as Llama 2, Mistral, Dolphin Phi, Phi-2, Neural Chat, Starling, and more. This post covered what Ollama is and how much RAM each model size needs. It also showed how to install Ollama on macOS and Windows, pull models from the command line or through the API, run and chat with them, and remove them when you no longer need them. Overall, Ollama is a convenient tool for exploring large language models on your own hardware.
